DYNAMIC POPULATION ENCODERS


author: Tofara moyo

The human brain is an amazing machine. It is composed of billions of neurons that exchange information, and adapt the ways they exchange it, in order to learn from and process the information the individual receives.

We know that neurons have a binary, on-or-off nature, and it is in the pattern of neurons firing and not firing that consciousness emerges. We assume that the brain represents concepts with population codes rather than in the ways proposed by other theories, such as grandmother cells.

The population code theory holds that concepts are represented in the brain by groups of firing neurons rather than by individual neurons; representation by individual neurons would instead amount to the grandmother cell hypothesis. What this means is that each time you think of the concept “cat”, roughly the same group of neurons fires in a specific part of the brain. Likewise, when you perceive a cat, similar neurons fire with each instance of that perception.
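
As an illustration only, the sketch below assumes a firing pattern can be written as the set of indices of neurons that fired, and measures how similar two patterns are by their overlap (the Jaccard index, which is our choice of measure, not the author's). The neuron indices are made up; they are not recordings.

```python
def jaccard(a: set[int], b: set[int]) -> float:
    """Fraction of neurons shared by two firing patterns (0.0 = disjoint, 1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

# Hypothetical firing patterns: indices of the neurons that fired.
cat_thought_1 = {3, 7, 12, 19, 25}
cat_thought_2 = {3, 7, 12, 19, 31}   # "roughly the same group" of neurons
dog_thought = {4, 8, 13, 19, 40}

print(jaccard(cat_thought_1, cat_thought_2))  # 4 shared out of 6 in total, about 0.67
print(jaccard(cat_thought_1, dog_thought))    # 1 shared out of 9 in total, about 0.11
```

Two thoughts of a cat share most of their active neurons while a different concept shares few, which is what “roughly the same group of neurons” amounts to in set terms.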

The same applies to the brain's processing mechanisms: each time you perform a specific mental manipulation, a particular group of neurons fires.

Recall that the information contained in an individual's pattern of neural firing reflects his thoughts and thought processes, that is, his mental life.

Consider the following thought experiment. We take a subject whose brain will be the focus of the experiment. Next, we take a grid of cells that can light up and turn off.

There will be one cell in the grid for each neuron in the subject's brain, and whenever a neuron fires in his brain, the corresponding cell lights up and turns off immediately afterwards.

If we were to run this experiment, we should see a pattern of lights turning on and off on the grid. Since there is one cell for each neuron in the subject's brain, the population codes in the grid during the experiment are clearly isomorphic to those in his brain.

In fact, the distribution of this pattern of lights contains enough information to determine all of the individual's mental processes.

We now turn to cellular automata. A cellular automaton consists of a grid of cells just like the one in the experiment, together with a rule for deciding which cells light up and which turn off. For example, the rule might be: whenever a lit cell turns off, the cells that are its neighbours light up. This simple rule can create quite complex patterns of light, though of course those patterns have nothing to do with a mind.
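
The rule just described can be simulated in a few lines of Python. This is a minimal sketch under assumptions the text leaves open: time advances in discrete synchronous steps, a lit cell stays lit for exactly one step, “neighbours” means the four orthogonally adjacent cells, and the grid does not wrap at its edges.

```python
Grid = list[list[int]]

def step(grid: Grid) -> Grid:
    """Apply the rule once: every lit cell turns off, and its four
    orthogonal neighbours light up on the next step."""
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols:  # no wrap-around
                        nxt[nr][nc] = 1
    return nxt

def show(grid: Grid) -> str:
    """Render lit cells as '#' and dark cells as '.'."""
    return "\n".join("".join("#" if v else "." for v in row) for row in grid)

grid = [[0] * 7 for _ in range(7)]
grid[3][3] = 1  # light a single cell in the centre
for t in range(3):
    print(f"t={t}\n{show(grid)}\n")
    grid = step(grid)
```

Starting from a single lit cell, the lit region spreads outward step by step; whether a cell is lit depends only on its grid neighbours in the previous step.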

A mind would be more aptly modeled by a type of automaton called a networked cellular automaton. In this automaton, cells can be connected so that when a particular lit cell turns off, the cell that lights up next under the rule need not be its immediate neighbour. This is because neighbourhood is defined by the network of connections rather than by position in the grid.
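
In a networked automaton the same kind of rule applies, but “neighbour” is read off a table of connections instead of off grid positions. The sketch below assumes directed connections stored as a plain dictionary from each node to the nodes it connects to; the wiring itself is hypothetical.

```python
# Hypothetical wiring: node -> nodes it connects to. Node 0 is "next to" node 5
# in the network even though nothing about their positions says so.
connections: dict[int, list[int]] = {
    0: [5],
    1: [2, 4],
    2: [0],
    3: [],
    4: [3],
    5: [1],
}

def step(firing: set[int], connections: dict[int, list[int]]) -> set[int]:
    """Nodes that are firing turn off; the nodes they connect to fire next."""
    nxt: set[int] = set()
    for node in firing:
        nxt.update(connections.get(node, []))
    return nxt

firing = {0}
for t in range(5):
    print(t, sorted(firing))
    firing = step(firing, connections)
```

Changing the dictionary changes which nodes count as neighbours, which is exactly the freedom the grid-based rule lacks.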

If we return to the experiment above, notice that if a networked cellular automaton had the right connections to mimic the firing of the grid during the experiment, then by hypothesis it would contain the same information that was in the mind of the subject.

If we had this automaton, we could connect it to a robotic system and have it control the robot with the same dispositions as the subject. Note that learning would have to be performed by changing the connections in this networked automaton, since the pattern of firing in the subject's head changes as he learns.

So a reward system would be connected to the robot. We would then alter specific connections, by a method still to be specified, in order to maximise the net cumulative reward and hence learn.
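
The text does not pin down “net cumulative reward”. One standard reading, assumed here, is the return of an episode: the sum of the per-step rewards, optionally discounted by a factor γ (gamma) so that later rewards count for less. With γ = 1 it is simply the plain sum. The reward values below are hypothetical.

```python
def cumulative_reward(rewards: list[float], gamma: float = 1.0) -> float:
    """Return sum_k gamma**k * rewards[k]; gamma=1.0 gives the plain (net) sum."""
    total = 0.0
    for k, r in enumerate(rewards):
        total += (gamma ** k) * r
    return total

episode = [0.0, 1.0, -5.0, 1.0]   # hypothetical per-step rewards
print(cumulative_reward(episode))             # -3.0
print(cumulative_reward(episode, gamma=0.9))  # later rewards weigh less
```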

We would like to come up with such a robotic system, but it would be a tall order to try to mimic the connectivity of someone's brain with an automaton. We shall instead initialise all the connections within the automaton randomly and then adjust them so as to increase the net cumulative reward of the system.

That way, once the system has learnt to increase its net cumulative reward, it will have built a pattern of connections that contains the disposition to control the robot appropriately.

From here on we should use more formal language: instead of a grid of cells, we speak of a family of nodes. We could then initialise their connections randomly, but we shall opt for a more sophisticated approach.

We shall start with all the nodes suspended in 3D space and no connections between them whatsoever.

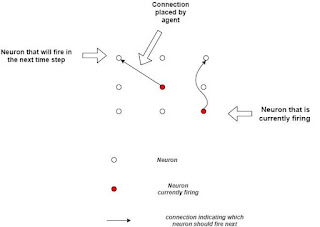

Then, when some of the nodes fire, our algorithm places connections between those that fired and those that are meant to fire next. When the nodes that fire next do fire, the cycle repeats.
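
A minimal sketch of this cycle, assuming directed connections and a hypothetical placeholder policy `choose_next` that simply picks two random nodes. In the scheme described here, that choice is exactly what still has to be learned; the sketch only shows how connections accumulate as nodes fire.

```python
import random

NUM_NODES = 8  # hypothetical size of the family of nodes

def choose_next(firing: frozenset[int], rng: random.Random) -> frozenset[int]:
    """Placeholder policy (hypothetical): picks which nodes should fire next.
    A learned policy would take `firing` and the robot's state into account."""
    return frozenset(rng.sample(range(NUM_NODES), k=2))

def run(steps: int, seed: int = 0) -> set[tuple[int, int]]:
    rng = random.Random(seed)
    edges: set[tuple[int, int]] = set()  # start with no connections at all
    firing = frozenset({0})              # some initial nodes fire
    for _ in range(steps):
        nxt = choose_next(firing, rng)
        # Place a connection from every node that fired to every node meant to fire next.
        edges.update((src, dst) for src in firing for dst in nxt)
        firing = nxt                     # those nodes fire, and the cycle repeats
    return edges

edges = run(steps=5)
print(len(edges), sorted(edges))
```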

We have yet to design this algorithm so that it places the appropriate connections at the right time. Note that the robotic system is an agent, but, more subtly, the algorithm that establishes the connections in the automaton is also an agent, just one with no actual body.

Whereas the robot maps the state it is in to physical actions, the algorithmic agent maps the input to the robot to a set of connections that it sets up. So the act of setting up connections becomes the algorithmic agent's action, and since it can connect the nodes in potentially many different ways, its set of actions is the set of all possible ways to form connections between nodes.
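
To get a feel for how large this action set is, assume connections are directed, self-connections are not allowed, and any subset of the possible connections counts as one action. Then n nodes allow n(n − 1) connections and 2^(n(n − 1)) distinct actions.

```python
def num_possible_connections(n: int) -> int:
    """Directed connections between distinct nodes (assumption: no self-loops)."""
    return n * (n - 1)

def action_space_size(n: int) -> int:
    """Every subset of the possible connections is a distinct action."""
    return 2 ** num_possible_connections(n)

for n in (3, 10, 100):
    digits = len(str(action_space_size(n)))
    print(n, "nodes:", num_possible_connections(n), "connections,", digits, "digit action count")
```

Three nodes already give 64 actions and ten nodes give 2^90, which illustrates why coordinating every connection with a single agent quickly becomes impractical.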

The rule that the algorithmic agent uses for establishing connections is then its policy, and exploring new state-action pairs involves creating pathways that the agent does not currently believe are the best choice under its policy.
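
The text does not say how exploration is scheduled. Epsilon-greedy selection is one common scheme, used here as an assumption: with a small probability ε the agent places a connection pattern chosen at random, otherwise it places the pattern its current Q-values rate highest. The three candidate patterns and their values are hypothetical.

```python
import random

def epsilon_greedy(q_values: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """With probability epsilon explore (any action, uniformly at random);
    otherwise exploit the action the current policy rates highest."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))
    return max(q_values, key=q_values.get)

# Hypothetical Q-values for three candidate connection patterns.
q = {"A->B": 0.8, "A->C": 0.1, "B->C": -0.4}
rng = random.Random(42)
choices = [epsilon_greedy(q, epsilon=0.1, rng=rng) for _ in range(1000)]
print({a: choices.count(a) for a in q})
```

Most choices follow the policy, while the occasional random choice lets the agent try pathways it does not currently believe are best.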

This realisation makes it clear how we shall arrange for the networked cellular automaton to learn its connections: we shall use Q-learning, specifically deep Q-learning. The input to the Q-network will be the state that the robot is in, and the output will be a list indicating which connections to place between specific nodes.
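
The text leaves open how a Q-network's output becomes “a list showing which connections to place”. The sketch below makes one assumption explicit: the network outputs one Q-value per candidate directed connection, and the agent places the k highest-valued connections. The sizes are hypothetical and the weights are random and untrained; only the forward pass is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

NUM_NODES = 5   # hypothetical
STATE_DIM = 12  # hypothetical size of the robot's state vector
HIDDEN = 32
EDGES = [(i, j) for i in range(NUM_NODES) for j in range(NUM_NODES) if i != j]

# Untrained two-layer network: state -> one Q-value per candidate connection.
W1 = rng.normal(scale=0.1, size=(STATE_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(scale=0.1, size=(HIDDEN, len(EDGES)))
b2 = np.zeros(len(EDGES))

def q_values(state: np.ndarray) -> np.ndarray:
    hidden = np.maximum(0.0, state @ W1 + b1)  # ReLU hidden layer
    return hidden @ W2 + b2                     # shape: (len(EDGES),)

def connections_to_place(state: np.ndarray, k: int = 3) -> list[tuple[int, int]]:
    """Read the output as 'which connections to place': the k highest-valued edges."""
    q = q_values(state)
    best = np.argsort(q)[::-1][:k]
    return [EDGES[i] for i in best]

state = rng.normal(size=STATE_DIM)
print(connections_to_place(state))
```

Scoring each connection separately keeps the output layer at n(n − 1) units instead of one unit per possible set of connections, at the cost of treating connections as independent choices.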

As it stands, this is very impractical: there may be far too many connections for a single agent to learn to coordinate their setup. Instead, we will split the network into groups of nodes and assign an algorithmic agent to each group. For this to work, each agent must know what is happening in the other groups, so we will include the state of the entire network in the input to each agent's Q-network. Ultimately the different agents will learn to cooperate for the greater good of the system, which is to increase the net cumulative reward that it receives.
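
A sketch of the split, under assumptions of our own: nodes are divided into equal consecutive groups, each agent only places connections that start in its own group, and every agent's input is the robot's state concatenated with the firing state of the whole network. All sizes are hypothetical.

```python
import numpy as np

NUM_NODES = 12
NUM_AGENTS = 3
# Hypothetical split into consecutive groups: [0..3], [4..7], [8..11].
groups = np.array_split(np.arange(NUM_NODES), NUM_AGENTS)

def agent_input(robot_state: np.ndarray, firing: np.ndarray) -> np.ndarray:
    """Every agent sees the state of the WHOLE network, not just its own group."""
    return np.concatenate([robot_state, firing.astype(float)])

def agent_candidate_edges(agent: int) -> list[tuple[int, int]]:
    """Each agent only places connections that start in its own group (assumption)."""
    own = groups[agent]
    return [(int(s), d) for s in own for d in range(NUM_NODES) if d != s]

robot_state = np.zeros(4)  # hypothetical 4-dimensional robot state
firing = np.zeros(NUM_NODES, dtype=bool)
firing[[0, 5, 9]] = True   # nodes currently firing
for a in range(NUM_AGENTS):
    x = agent_input(robot_state, firing)
    print(a, groups[a].tolist(), x.shape, len(agent_candidate_edges(a)))
```

Each agent now chooses among 44 connections rather than all 132, while still seeing everything that is firing.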

There are more savings. We will use only one Q-network, with a one-hot conditioning vector (one dimension per agent) as an additional input. Then, when we are predicting actions for a particular agent, we condition the input to the Q-network on that agent's one-hot vector.
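
A minimal sketch of the conditioning, assuming every agent has the same number of candidate connections so that one output layer fits all of them. A single random linear layer stands in for the deep network; the sizes are hypothetical.

```python
import numpy as np

NUM_AGENTS = 3
STATE_DIM = 16    # hypothetical: whole-network state, as every agent sees it
NUM_OUTPUTS = 44  # hypothetical: Q-values for one agent's candidate connections

rng = np.random.default_rng(0)
# ONE set of weights shared by all agents (a single linear layer for brevity).
W = rng.normal(scale=0.1, size=(STATE_DIM + NUM_AGENTS, NUM_OUTPUTS))

def one_hot(agent: int) -> np.ndarray:
    v = np.zeros(NUM_AGENTS)
    v[agent] = 1.0
    return v

def shared_q(state: np.ndarray, agent: int) -> np.ndarray:
    """Condition the shared network on which agent is acting."""
    return np.concatenate([state, one_hot(agent)]) @ W

state = rng.normal(size=STATE_DIM)
q0, q1 = shared_q(state, 0), shared_q(state, 1)
print(q0.shape, np.allclose(q0, q1))  # same state, different agents: different Q-values
```

The one-hot input lets a single set of weights produce agent-specific Q-values, so the parameter count does not grow with one full network per agent.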

If we trained a robotic system using this regimen, we should expect some structure to have formed within the automaton. This would include long- and short-term memory as well as processing capabilities, conceptualisation, and everything that the subject of the thought experiment could do.

Here is an example of the system in action.

The example we will use is that of a robot “hurting its hand” and receiving a negative reward as a result.

First, the state of the system is passed to the algorithmic agent's Q-network. Conditioned on that state, the agent deterministically adds connections between the nodes that are currently firing and the ones that, according to its policy, should fire next.

If that action causes it to receive a negative reward, the Q-network learns that this particular action, that is, this set of connections, was the wrong one to place, and the policy that made those nodes fire next in that situation is changed.

So learning adapts the sequence in which nodes fire so that the robot hurting its hand does not recur. It does this by changing the policy of the algorithmic agent, and hence which connections are placed and ultimately which nodes fire next.
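
The effect of the negative reward can be traced with the standard Q-learning update, Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') − Q(s, a)]. A tabular version is used here purely for readability; in deep Q-learning a network is trained toward the same target instead of a table entry being overwritten. The states, actions, α and γ are hypothetical.

```python
from collections import defaultdict

ALPHA = 0.5  # learning rate (hypothetical)
GAMMA = 0.9  # discount factor (hypothetical)

Q: defaultdict[tuple[str, str], float] = defaultdict(float)  # unseen pairs start at 0.0
ACTIONS = ["connect A->B", "connect A->C"]  # hypothetical connection sets

def greedy(state: str) -> str:
    """The action the current policy would take deterministically."""
    return max(ACTIONS, key=lambda a: Q[(state, a)])

def q_update(state: str, action: str, reward: float, next_state: str) -> None:
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    target = reward + GAMMA * max(Q[(next_state, a)] for a in ACTIONS)
    Q[(state, action)] += ALPHA * (target - Q[(state, action)])

s = "hand near hot surface"
Q[(s, "connect A->B")] = 0.2  # the agent currently prefers A->B here
print(greedy(s))              # connect A->B
q_update(s, "connect A->B", reward=-10.0, next_state="hand hurt")
print(greedy(s))              # connect A->C
```

After a single painful outcome, Q(s, A->B) drops from 0.2 to −4.9, so the greedy policy switches to the other set of connections and a different group of nodes fires next in that situation.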

Another example shows how the population code for a cat will show up once the robot sees a cat.

In this case, the state, which now includes the sight of the cat, is passed to the algorithmic agent's Q-network. The agent uses this information to determine which connections to place between which nodes, and so which nodes will fire next.

Presumably, if the robot sees another cat, roughly the same connections to the nodes that fire next will be placed. The nodes that consistently fire next after the presentation of a cat will then be the population code for a cat.
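
“Consistently” needs a threshold to be testable. The sketch below assumes a node belongs to the population code if it fires next in at least 80% of the cat presentations; both the threshold and the trial data are hypothetical.

```python
from collections import Counter

def population_code(trials: list[set[int]], threshold: float = 0.8) -> set[int]:
    """Nodes that fire next in at least `threshold` of the presentations."""
    if not trials:
        return set()
    counts = Counter(node for fired in trials for node in fired)
    return {node for node, c in counts.items() if c / len(trials) >= threshold}

# Hypothetical: nodes that fired next after five separate presentations of a cat.
cat_trials = [
    {2, 5, 9, 14},
    {2, 5, 9, 11},
    {2, 5, 9, 14, 20},
    {2, 5, 14},
    {2, 5, 9, 14},
]
print(sorted(population_code(cat_trials)))  # [2, 5, 9, 14]
```

Nodes 11 and 20 fire only once each and are excluded, while nodes that appear in four or five of the five trials form the code.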
